Docker setup

Docker

Docker is the definitive containerisation system for running applications.

Enable non-root access to the Docker daemon
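The original command for this step is not shown here. A minimal sketch, assuming the usual approach of adding the user to the docker group; the function name enable_docker_nonroot is hypothetical, and the command is wrapped in a function so that nothing changes until it is called:

```shell
# Assumption: non-root access is granted via membership of the "docker" group.
# Hypothetical helper; pass a username or let it default to the current user.
enable_docker_nonroot() {
  target_user="${1:-$USER}"
  # -aG appends the group without dropping the user's existing groups
  sudo usermod -aG docker "$target_user"
}

# Usage:
#   enable_docker_nonroot
```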

(You need to log out and back in for this to take effect.)

Portainer

Portainer is a powerful GUI for managing Docker containers.

Create the Portainer volume and then start the Docker container. For security, bind the port only to localhost so that it cannot be accessed remotely except via an SSH tunnel.

Create portainer container

Setup SSH tunnel - example SSH connection string

SSH tunnel
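The two steps above could look roughly like the sketch below. The volume name portainer_data, the portainer/portainer-ce image tag, the container name and both function names are assumptions rather than the original commands; the key detail is the 127.0.0.1: prefix on the published port, which keeps the UI off the public interface.

```shell
# Create the named volume and start Portainer with its UI published on loopback only.
# Assumptions: volume "portainer_data", image portainer/portainer-ce, UI on port 9000.
create_portainer() {
  docker volume create portainer_data
  docker run -d --name portainer --restart=always \
    -p 127.0.0.1:9000:9000 \
    -v /var/run/docker.sock:/var/run/docker.sock \
    -v portainer_data:/data \
    portainer/portainer-ce:latest
}

# Forward local port 9000 to port 9000 on the server's loopback interface.
# $1 is a placeholder such as user@server.example.com
open_portainer_tunnel() {
  ssh -N -L 9000:localhost:9000 "$1"
}
```

Run create_portainer once on the server, and open_portainer_tunnel from your own machine whenever you need the UI.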

Then connect using http://localhost:9000

Go to Environments > local and add the server's public IP so that all the published port links are clickable.

Aim to put volumes in ~/containers/[containername] for consistency.

Watchtower

Watchtower is a container-based solution for automating Docker container base image updates.

Initial docker config setup

Watchtower can pull from public repositories but to link to a private Docker Hub you need to supply login credentials. This is best achieved by running a docker login command in the terminal, which will create a file in $HOME/.docker/config.json that we can then link as a volume to the Watchtower container. If this is not done prior to running the container then Docker will instead create the config.json file as a directory!
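A small pre-flight check can catch the directory pitfall before the container is started. This is a sketch only; the function name docker_config_ready is hypothetical and the default path is the one mentioned above.

```shell
# Return 0 only if the Docker credentials file exists as a regular file.
# Hypothetical helper; the default path is where docker login writes its config.
docker_config_ready() {
  cfg="${1:-$HOME/.docker/config.json}"
  if [ -d "$cfg" ]; then
    echo "error: $cfg is a directory; remove it and run 'docker login'" >&2
    return 1
  elif [ ! -f "$cfg" ]; then
    echo "error: $cfg not found; run 'docker login' first" >&2
    return 1
  fi
  return 0
}

# Usage:
#   docker login
#   docker_config_ready && echo "safe to start Watchtower"
```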

If 2FA is enabled on the Docker account then go to https://hub.docker.com/settings/security?generateToken=true to set up an access token.

The configuration below links to this config file and also links to the local time and tells Watchtower to include stopped containers and verbose logging.

Warning

Remember to change the email settings below

bash

# Replace the <...> placeholders before running; they are quoted so that
# the shell does not treat < and > as redirections.
docker run --detach \
  --name watchtower \
  --volume /var/run/docker.sock:/var/run/docker.sock \
  --volume "$HOME/.docker/config.json":/config.json \
  -v /etc/localtime:/etc/localtime:ro \
  -e WATCHTOWER_NOTIFICATIONS=email \
  -e WATCHTOWER_NOTIFICATIONS_HOSTNAME="<hostname>" \
  -e WATCHTOWER_NOTIFICATION_EMAIL_TO="<target email>" \
  -e WATCHTOWER_NOTIFICATION_EMAIL_SERVER_PASSWORD="<password>" \
  -e WATCHTOWER_NOTIFICATION_EMAIL_DELAY=2 \
  -e WATCHTOWER_NOTIFICATION_EMAIL_FROM="<sending email>" \
  -e WATCHTOWER_NOTIFICATION_EMAIL_SERVER="<mailserver>" \
  -e WATCHTOWER_NOTIFICATION_EMAIL_SERVER_PORT=587 \
  -e WATCHTOWER_NOTIFICATION_EMAIL_SERVER_USER="<maillogin>" \
  containrrr/watchtower --include-stopped --debug

docker-compose/watchtower.yml

Run frequency

By default Watchtower runs once per day, with the first run 24h after container activation. This can be adjusted by passing the --interval flag and specifying the number of seconds. There is also the option of using the --run-once flag to immediately check all containers and then stop Watchtower.
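Because --interval takes seconds, a tiny conversion helper avoids arithmetic slips. The function name hours_to_seconds is hypothetical; the flags in the comments are the ones described above.

```shell
# Convert a whole number of hours to the seconds value that --interval expects.
hours_to_seconds() {
  echo $(( $1 * 3600 ))
}

# Appended to the end of the docker run command, e.g. to check every 6 hours:
#   containrrr/watchtower --interval "$(hours_to_seconds 6)"
# or to check once immediately and then exit:
#   containrrr/watchtower --run-once
```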

Private Docker Hub images

Ensure any private docker images have been started as index.docker.io/<user>/main:tag rather than <user>/main:tag
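As an illustration of the naming rule, the sketch below prefixes a Docker Hub image reference with index.docker.io/ unless it is already there. The function name qualify_image is hypothetical, and it assumes the argument is a Docker Hub image rather than one from another registry.

```shell
# Prefix a Docker Hub image reference with index.docker.io/ if it is missing.
# Assumption: the input is a Docker Hub image such as <user>/main:tag.
qualify_image() {
  case "$1" in
    index.docker.io/*) echo "$1" ;;
    *) echo "index.docker.io/$1" ;;
  esac
}

# Usage:
#   docker run -d "$(qualify_image myuser/main:tag)"
```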

Exclude containers

To exclude a container from being checked, it needs to be created with a label set in the docker-compose file that tells Watchtower to ignore it.
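Watchtower's opt-out label is com.centurylinklabs.watchtower.enable=false. The sketch below sets it with docker run; in a compose file the same key and value would go under the service's labels. The function name start_unwatched and its argument order are hypothetical.

```shell
# Start a container that Watchtower will skip.
# usage: start_unwatched <name> <image> [extra docker run options...]
start_unwatched() {
  name="$1"; image="$2"; shift 2
  docker run -d --name "$name" \
    --label com.centurylinklabs.watchtower.enable=false \
    "$@" "$image"
}
```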

To compare images with those on Docker Hub, use docker images --digests to show the sha256 hash.

Nginx Proxy Manager

Nginx Proxy Manager lets private containerised applications run via secure HTTPS proxies (including free Let's Encrypt SSL certificates).

Apply this docker-compose (based on https://nginxproxymanager.com/setup/#running-the-app) as a stack in Portainer to deploy:

docker-compose/nginx-proxy-manager.yml

Log in to the admin console at <serverIP>:81 with email admin@example.com and password changeme. Then change the user email/password combination.

Set up a new proxy host for NPM itself with scheme http, forward hostname of localhost and forward port of 81.

Force SSL access to admin interface

Once initial setup is completed, change & reload the NPM stack in Portainer to comment out port 81 so that access to admin interface is only via SSL.

Remember to change the Default Site in NPM settings for appropriate redirections for invalid subdomain requests.

Certbot errors on certificate renewal

In general using the custom image I created at image: 'jc21/nginx-proxy-manager:github-pr-3121' should resolve this issue.

If there is an error re a duplicate instance, check whether there are .certbot.lock files in your system.
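A sketch of finding and clearing the lock files, based on the find approach in the Let's Encrypt community thread referenced here. The function names are hypothetical, and with NPM the commands would typically be run inside the NPM container.

```shell
# List .certbot.lock files under a directory tree (default: the whole filesystem).
find_certbot_locks() {
  find "${1:-/}" -type f -name '.certbot.lock' 2>/dev/null
}

# Delete them; only do this once you are sure no certbot process is still running.
clear_certbot_locks() {
  find "${1:-/}" -type f -name '.certbot.lock' -exec rm -f {} \; 2>/dev/null
}

# Usage:
#   find_certbot_locks      # inspect first
#   clear_certbot_locks     # then remove
```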

If there are, you can remove them (from https://community.letsencrypt.org/t/solved-another-instance-of-certbot-is-already-running/44690/2).

After clearing the certbot lock, go through site by site and 1) disable SSL, 2) renew the certificate, then 3) re-enable SSL (and all sub-options).

If you mistakenly delete an old certificate first and get stuck with file-not-found error messages, copy an existing known-good folder across (e.g. cp -r /etc/letsencrypt/live/npm-1 /etc/letsencrypt/live/npm-7).

(If looking at Traefik instead then there's a reasonably helpful config guide that's worth looking at).

Dozzle

Nice logviewer application that lets you monitor all the container logs - https://dozzle.dev/

Apply this docker-compose as a stack in Portainer to deploy:

docker-compose/dozzle.yml

Add to nginx proxy manager as usual (forward hostname dozzle and port 8080), but with the addition of proxy_read_timeout 30m; in the advanced settings tab to minimise the issue of the default 60s proxy timeout causing repeat log entries.

To view log files that are written to disk, create an Alpine container that tails the log file on a shared volume (see the Dozzle documentation). Also note that Docker maintains its own logs, so with the weekly reset the container has to be recreated.

diskmonitor_stream.yml

Filebrowser

A nice GUI file browser - https://github.com/filebrowser/filebrowser

Create the empty db and file structure first
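The original commands are not shown here. A minimal sketch, assuming the database file is called filebrowser.db and lives next to a branding directory under ~/containers/filebrowser; the file name and the function name prepare_filebrowser are assumptions. Creating the file up front stops Docker from creating a directory in its place when the volume is mounted.

```shell
# Pre-create the directory structure and an empty database file.
# Assumptions: base directory ~/containers/filebrowser, database file filebrowser.db.
prepare_filebrowser() {
  base="${1:-$HOME/containers/filebrowser}"
  mkdir -p "$base/branding"
  # touch creates the file if it is missing and leaves existing content intact
  touch "$base/filebrowser.db"
}

# Usage:
#   prepare_filebrowser
```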

Then create /containers/filebrowser/settings.json

Then install via docker-compose:

docker-compose/filebrowser.yml

Then set up the NPM SSL reverse proxy (remember to include websocket support, with forward hostname filebrowser and port 80) and then log in:

Default credentials

Username: admin

Password: (unique, created when DB first created - check logs)


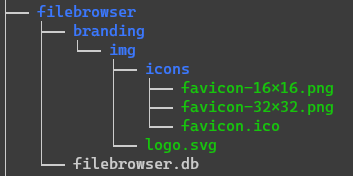

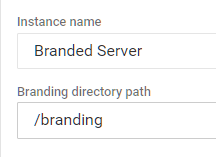

To customise the appearance, change the instance name (e.g., Deployment server) and set the branding directory path (e.g., /branding) in Settings > Global Settings. Then create img and img/icons directories in the previously created containers/filebrowser/branding directory and add the logo.svg, the favicon.ico and the 16x16 and 32x32 PNGs (if you only add the .ico then the browser will pick the internal higher-resolution PNGs).

Generating favicons

The favicon generator is a very useful website to generate all the required favicons for different platforms.

Optional containers

HTTP Captive Portal

This is a self-hosted website available via plain HTTP (as well as HTTPS) to help trigger the login splash page for captive login portals when travelling (e.g., hotel, airplane, etc.).

It displays the IP address & approximate location the user is connecting from as well as local and server date/time.

Originally it was designed to serve only HTTP; however, mobile browsers would refuse to load this, so there is also an HTTPS option that uses either a self-generated certificate or a certificate from CloudFlare (on their website go to SSL/TLS -> Origin Server -> Create Certificate). Just run the script to generate self-signed certificates and then replace them with the CRT/PEM files from CloudFlare.

When proxying via CloudFlare the origin IP address will be hidden, however it is passed in the header - the nginx.conf will automatically adjust for this and replace the IP with this one for traffic originating from CloudFlare IPs.

Install via docker-compose:

docker-compose/http-captive-portal.yml

In the container folder add the following files:

Run generate-cert.sh (after chmod +x) to generate the self-signed certs. You can then replace these files (keeping the same filenames) with the CloudFlare ones if desired.

Bytestash

A handy site for storing code snippets - https://github.com/jordan-dalby/ByteStash

Install via docker-compose:

docker-compose/bytestash.yml

IT-Tools

A collection of handy dev tools with a very nice UX - https://github.com/CorentinTh/it-tools – most of them are things that could be found online but nice to have it in one easily searchable place that you know only runs locally and doesn’t give anything to a third-party.

Example tools: generate GUIDs; convert colours, date/time, case, Unicode, YAML/JSON/XML, Markdown to HTML; encode/decode URL strings; parse JWT; quick HTML WYSIWYG editor; JSON and text diff; HTTP status code reference; QR code generator; crontab generator/validator; JSON prettify & minify; JSON to CSV; SQL prettify/format; Docker run to compose converter; Regex cheatsheet & tester; IPv4 subnet calculator; Lorem ipsum generator; emoji picker 😃; plus many more!

Install via docker-compose:

docker-compose/it-tools.yml

Stirling PDF

A Swiss army knife for interacting with PDFs - https://www.stirlingpdf.com/

Install via docker-compose:

docker-compose/stirling-pdf.yml

Uptime Kuma monitoring

A nice status monitoring app - https://github.com/louislam/uptime-kuma

Install via docker-compose:

docker-compose/uptime-kuma.yml

Join to bridge network post setup if required

Remember that docker-compose can only join the new container to one network, so if you also want to monitor containers that aren't on the nginx-proxy-manager_default network you need to add it to the bridge network manually afterwards. Use the following command (or add the network via Portainer):
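The original command is not shown here. A sketch using docker network connect, assuming the container is named uptime-kuma; the function name join_bridge is hypothetical.

```shell
# Attach an already-running container to Docker's default bridge network.
# Assumption: the container name defaults to "uptime-kuma".
join_bridge() {
  docker network connect bridge "${1:-uptime-kuma}"
}

# Usage:
#   join_bridge
```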

NextCloud

Cloud-hosted sharing & collaboration server - https://hub.docker.com/r/linuxserver/nextcloud and https://nextcloud.com/

Install via docker-compose:

docker-compose/nextcloud.yml - remember to change host directories if required

Then set up the NPM SSL reverse proxy to https port 443 and navigate to the new site to set up the login.

Setup 2FA

After login go to User > Settings > Security (Administration section) > Enforce two-factor authentication.

Then go User > Apps > Two-Factor TOTP Provider (https://apps.nextcloud.com/apps/twofactor_totp) or just click on search icon at the top right and type in TOTP

Then go back to User > Settings > Security (Personal section) > Tick 'Enable TOTP' and verify the code

Glances

System monitoring tool - https://nicolargo.github.io/glances/

Install via docker-compose:

docker-compose/glances.yml

Then set up the NPM SSL reverse proxy to https port 443 and navigate to the new site to set up the login.

Webtop

'Linux in a web browser' https://github.com/linuxserver/docker-webtop

Install via docker-compose:

docker-compose/webtop.yml

Then set up the NPM SSL reverse proxy to port 3000 and navigate to the new site.

MeshCentral

Self-hosted remote access client - https://github.com/Ylianst/MeshCentral & https://meshcentral.com/info/

Install via docker-compose:

docker-compose/meshcentral.yml

See the NGINX section of the user guide (p34 onwards) for more information about configuring MeshCentral to run alongside NPM. The key thing to note is that a fixed IP address needs to be specified in the docker-compose file for NPM - as an example, in the docker-compose sample file on this site it is set to 172.19.0.100. The MeshCentral configuration file needs to reflect this accordingly. If this is not done, the NPM container will be auto-allocated a new IP when it is re-created (e.g., when there is an update) and MeshCentral will then run into SSL errors, as it can't validate the certificate that has been passed through.

Log error with IP mismatch with configuration.

02/25/2023 2:52:11 PM  Installing [email protected]...
02/25/2023 2:52:25 PM  MeshCentral HTTP redirection server running on port 80.
02/25/2023 2:52:25 PM  MeshCentral v1.1.4, WAN mode, Production mode.
02/25/2023 2:52:27 PM  MeshCentral Intel(R) AMT server running on remote.alanjrobertson.co.uk:4433.
02/25/2023 2:52:27 PM  Failed to load web certificate at: "https://172.19.0.14:443/", host: "remote.alanjrobertson.co.uk"
02/25/2023 2:52:27 PM  MeshCentral HTTP server running on port 4430, alias port 443.
02/25/2023 2:52:48 PM  Agent bad web cert hash (Agent:68db80180d != Server:c68725feb5 or 9259b83292), holding connection (172.19.0.11:44332).
02/25/2023 2:52:48 PM  Agent reported web cert hash:68db80180d05fce0032a326259b825c76f036593c62a8be0346365eb5540a395dbfae31d8cade3f2a4370c29c2563c27.
02/25/2023 2:52:48 PM  Failed to load web certificate at: "https://172.19.0.14:443/", host: "remote.alanjrobertson.co.uk"
02/25/2023 2:52:48 PM  Agent bad web cert hash (Agent:68db80180d != Server:c68725feb5 or 9259b83292), holding connection (172.19.0.11:44344).
02/25/2023 2:52:48 PM  Agent reported web cert hash:68db80180d05fce0032a326259b825c76f036593c62a8be0346365eb5540a395dbfae31d8cade3f2a4370c29c2563c27.
02/25/2023 2:53:18 PM  Agent bad web cert hash (Agent:68db80180d != Server:c68725feb5 or 9259b83292), holding connection (172.19.0.11:52098).
02/25/2023 2:53:18 PM  Agent reported web cert hash:68db80180d05fce0032a326259b825c76f036593c62a8be0346365eb5540a395dbfae31d8cade3f2a4370c29c2563c27.
02/25/2023 2:54:03 PM  Agent bad web cert hash (Agent:68db80180d != Server:c68725feb5 or 9259b83292), holding connection (172.19.0.11:53218).
02/25/2023 2:54:03 PM  Agent reported web cert hash:68db80180d05fce0032a326259b825c76f036593c62a8be0346365eb5540a395dbfae31d8cade3f2a4370c29c2563c27.

Edit ~/containers/meshcentral/data/config.json to replace with the following. Remember that items beginning with an underscore are ignored.

config.json - remember to edit highlighted lines to ensure the correct FQDN and NPM host are specified

Then set up the NPM SSL reverse proxy to port 4430 (remember to switch on websocket support) and navigate to the new site.

If running with Authelia, add new entries to its configuration file too, so that the agent, the meshrelay (for remote control) and the invite download page (for setup of the agents and settings) can all bypass authentication while the main web UI stays under two-factor:

- domain: remote.alanjrobertson.co.uk
  resources:
    # allow agent & agent invites to bypass
    - "^/agent.ashx([?].*)?$"
    - "^/agentinvite([?].*)?$"
    # allow mesh relay to bypass (for remote control, console, files) and agents to connect/obtain settings
    - "^/meshrelay.ashx([?].*)?$"
    - "^/meshagents([?].*)?$"
    - "^/meshsettings([?].*)?$"
    # allow files to be downloaded
    - "^/devicefile.ashx([?].*)?$"
    # allow invite page for agent download to be displayed
    - "^/images([/].*)?$"
    - "^/scripts([/].*)?$"
    - "^/styles([/].*)?$"
  policy: bypass
- domain: remote.alanjrobertson.co.uk
  policy: two_factor


Login to MeshCentral and set up an initial account. Then add a new group and download and install the agent. Once installed you will see it show up in MeshCentral and will be able to control/access remotely. There is also the option to download an Assistant (that can be branded) that users can then run once (doesn't require elevated privileges; also can run the Assistant with -debug flag to log if any issues).

To setup custom images for hosts, run the following commands:

docker exec -it meshcentral /bin/bash

cp -r public/ /opt/meshcentral/meshcentral-web/

cp -r views/ /opt/meshcentral/meshcentral-web/

Add AV exception

It is likely that an exception needs to be added to the AV software for C:\Program Files\Mesh Agent on the local machine (this is certainly the case with Avast).

Netdata

System monitoring tool - https://www.netdata.cloud/

Install via docker-compose:

docker-compose/netdata.yml

Then set up the NPM SSL reverse proxy to https port 443 and navigate to the new site to view the login. There is also the option of linking to an online account - you need to get the login token from the website and change the stack to include this in the environment variables.

YOURLS

Link shortener tool with personal tracking - https://yourls.org

Setup structure prior to deploying stack/docker compose to avoid directories having root ownership or files being set as directories:

setup commands
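The original commands are not shown here. A minimal sketch, assuming the host layout implied by the paths used in this section (plugins, html and favicon directories plus a my.cnf file under ~/containers/yourls); the exact set of paths and the function name prepare_yourls are assumptions.

```shell
# Pre-create the host directories and the my.cnf file as the current user, so that
# Docker neither creates them as root nor turns the file into a directory.
# Assumption: the stack maps plugins/, html/, favicon/ and my.cnf from this base path.
prepare_yourls() {
  base="${1:-$HOME/containers/yourls}"
  mkdir -p "$base/plugins" "$base/html" "$base/favicon"
  touch "$base/my.cnf"
}

# Usage:
#   prepare_yourls
```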

Copy my.cnf file contents to ~/containers/yourls/my.cnf - this reduces RAM usage from ~233MB down to 44MB

~/containers/yourls/my.cnf

# The MariaDB configuration file

#

# The MariaDB/MySQL tools read configuration files in the following order:

# 0. "/etc/mysql/my.cnf" symlinks to this file, reason why all the rest is read.

# 1. "/etc/mysql/mariadb.cnf" (this file) to set global defaults,

# 2. "/etc/mysql/conf.d/*.cnf" to set global options.

# 3. "/etc/mysql/mariadb.conf.d/*.cnf" to set MariaDB-only options.

# 4. "~/.my.cnf" to set user-specific options.

#

# If the same option is defined multiple times, the last one will apply.

#

# One can use all long options that the program supports.

# Run program with --help to get a list of available options and with

# --print-defaults to see which it would actually understand and use.

#

# If you are new to MariaDB, check out https://mariadb.com/kb/en/basic-mariadb-articles/

#

# This group is read both by the client and the server

# use it for options that affect everything

#

[client-server]

# Port or socket location where to connect

# port = 3306

socket = /run/mysqld/mysqld.sock

# Import all .cnf files from configuration directory

!includedir /etc/mysql/mariadb.conf.d/

!includedir /etc/mysql/conf.d/

[mysqld]

#max_connections = 100

max_connections = 10

connect_timeout = 5

wait_timeout = 600

max_allowed_packet = 16M

#thread_cache_size = 128

thread_cache_size = 0

#sort_buffer_size = 4M

sort_buffer_size = 32K

#bulk_insert_buffer_size = 16M

bulk_insert_buffer_size = 0

#tmp_table_size = 32M

tmp_table_size = 1K

#max_heap_table_size = 32M

max_heap_table_size = 16K

#

# * MyISAM

#

# This replaces the startup script and checks MyISAM tables if needed

# the first time they are touched. On error, make copy and try a repair.

myisam_recover_options = BACKUP

#key_buffer_size = 128M

key_buffer_size = 1M

#open-files-limit = 2000

table_open_cache = 400

myisam_sort_buffer_size = 512M

concurrent_insert = 2

#read_buffer_size = 2M

read_buffer_size = 8K

#read_rnd_buffer_size = 1M

read_rnd_buffer_size = 8K

#

# * Query Cache Configuration

#

# Cache only tiny result sets, so we can fit more in the query cache.

query_cache_limit = 128K

#query_cache_size = 64M

query_cache_size = 512K

# for more write intensive setups, set to DEMAND or OFF

#query_cache_type = DEMAND

#

# * Logging and Replication

#

# Both location gets rotated by the cronjob.

# Be aware that this log type is a performance killer.

# As of 5.1 you can enable the log at runtime!

#general_log_file = /var/log/mysql/mysql.log

#general_log = 1

#

# Error logging goes to syslog due to /etc/mysql/conf.d/mysqld_safe_syslog.cnf.

#

# we do want to know about network errors and such

#log_warnings = 2

#

# Enable the slow query log to see queries with especially long duration

#slow_query_log[={0|1}]

slow_query_log_file = /var/log/mysql/mariadb-slow.log

long_query_time = 10

#log_slow_rate_limit = 1000

#log_slow_verbosity = query_plan

#log-queries-not-using-indexes

#log_slow_admin_statements

#

# The following can be used as easy to replay backup logs or for replication.

# note: if you are setting up a replication slave, see README.Debian about

# other settings you may need to change.

#server-id = 1

#report_host = master1

#auto_increment_increment = 2

#auto_increment_offset = 1

#log_bin = /var/log/mysql/mariadb-bin

#log_bin_index = /var/log/mysql/mariadb-bin.index

# not fab for performance, but safer

#sync_binlog = 1

expire_logs_days = 10

max_binlog_size = 100M

# slaves

#relay_log = /var/log/mysql/relay-bin

#relay_log_index = /var/log/mysql/relay-bin.index

#relay_log_info_file = /var/log/mysql/relay-bin.info

#log_slave_updates

#read_only

#

# If applications support it, this stricter sql_mode prevents some

# mistakes like inserting invalid dates etc.

#sql_mode = NO_ENGINE_SUBSTITUTION,TRADITIONAL

#

# * InnoDB

#

# InnoDB is enabled by default with a 10MB datafile in /var/lib/mysql/.

# Read the manual for more InnoDB related options. There are many!

default_storage_engine = InnoDB

# you can't just change log file size, requires special procedure

#innodb_log_file_size = 50M

#innodb_buffer_pool_size = 256M

innodb_buffer_pool_size = 10M

#innodb_log_buffer_size = 8M

innodb_log_buffer_size = 512K

innodb_file_per_table = 1

innodb_open_files = 400

innodb_io_capacity = 400

innodb_flush_method = O_DIRECT

#

# * Security Features

#

# Read the manual, too, if you want chroot!

# chroot = /var/lib/mysql/

#

# For generating SSL certificates I recommend the OpenSSL GUI "tinyca".

#

# ssl-ca=/etc/mysql/cacert.pem

# ssl-cert=/etc/mysql/server-cert.pem

# ssl-key=/etc/mysql/server-key.pem

#

# * Galera-related settings

#

[galera]

# Mandatory settings

#wsrep_on=ON

#wsrep_provider=

#wsrep_cluster_address=

#binlog_format=row

#default_storage_engine=InnoDB

#innodb_autoinc_lock_mode=2

#

# Allow server to accept connections on all interfaces.

#

#bind-address=0.0.0.0

#

# Optional setting

#wsrep_slave_threads=1

#innodb_flush_log_at_trx_commit=0

[mysqldump]

quick

quote-names

max_allowed_packet = 16M

[mysql]

#no-auto-rehash # faster start of mysql but no tab completion

[isamchk]

key_buffer = 16M

#

# * IMPORTANT: Additional settings that can override those from this file!

# The files must end with '.cnf', otherwise they'll be ignored.

#

# As this lives in the container's conf.d directory, the includes would start a recursive loop, so comment them out

#!include /etc/mysql/mariadb.cnf

#!includedir /etc/mysql/conf.d/

Install via docker-compose:

docker-compose/yourls.yml

Note that after installation the root directory will just show an error - this is by design!

Instead you need to go to domain.tld/admin to access the admin interface. On first run, click to set up the database, then log in using the credentials that were pre-specified in the docker-compose file.

Invalid username/password issues

Note that when parsing the password from the stack to pass in an environment variable there can be issues with special characters (mainly $). You can check what has been passed as a parsed environment variable by looking at the container details in Portainer.
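Compose treats $ as the start of a variable reference, and a doubled $$ produces a literal dollar sign. A sketch of escaping a password before pasting it into a stack or compose file; the function name escape_compose_dollars is hypothetical.

```shell
# Double every $ so that Compose passes the value through literally.
escape_compose_dollars() {
  printf '%s\n' "$1" | sed 's/\$/$$/g'
}

# Usage (single quotes stop the shell itself from expanding the $):
#   escape_compose_dollars 'pa$sw0rd'
```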

It is also possible to check in ~/containers/yourls/users/config.php - before logging into the admin console this will show in cleartext at line 75 (press Alt-N in nano to show line numbers). After login it will be encrypted.

YOURLS has an extensible architecture - any plugins should be downloaded and added to subdirectories within ~/containers/yourls/plugins - see preview and qrcode as examples with setup instructions (although Preview URL with QR code is actually a nicer combined option to install than those separate ones - once installed, just append a ~ to the shortcode to see the preview). Once plugins have been copied into place, go to the admin interface to activate them.

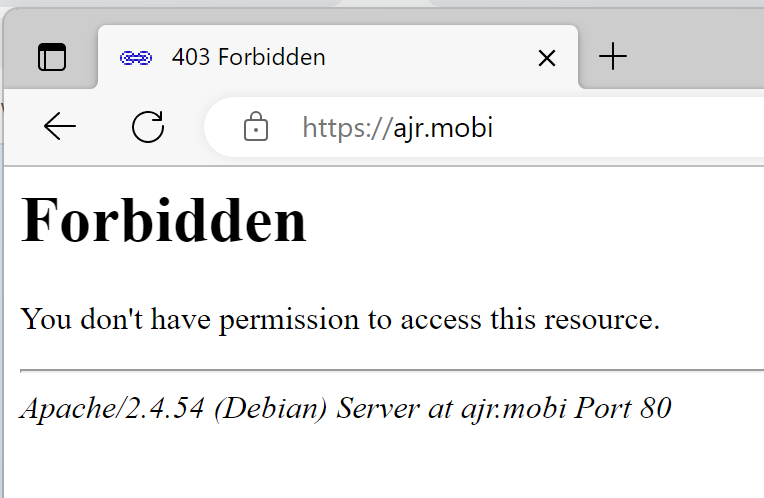

As mentioned, by default accessing the root directory (domain.tld) or an incorrect shortcode will display a 403 error page (as the latter just redirects to the root). Place an index.(html|php) file in the ~/containers/yourls/html directory of the host (volume is already mapped in the stack/docker-compose file) to replace this.

example index.html with background image and centred text

<html lang="en" translate="no">

<head>

<meta name="google" content="notranslate">

<link rel="preconnect" href="https://fonts.googleapis.com">

<link rel="preconnect" href="https://fonts.gstatic.com" crossorigin>

<link href="https://fonts.googleapis.com/css2?family=Courier+Prime&display=swap" rel="stylesheet">

<title>ajr.mobi</title>

<style>

* {

margin: 0;

padding: 0;

}

html {

background: url(bg.jpg) no-repeat center center fixed;

-webkit-background-size: cover;

-moz-background-size: cover;

-o-background-size: cover;

background-size: cover;

}

.align {

display: flex;

height: 100%;

align-items: center;

justify-content: center;

font-family: 'Courier Prime', monospace;

color: linen;

font-size: 350%;

}

</style>

</head>

<body><div class="align">ajr.mobi</div></body>

</html>

Don't map the whole /html directory as a Docker volume

If the whole /html directory is mapped then, when a new YOURLS Docker image is released, it will not be able to update correctly: any file in the mapped volume /var/www/html takes precedence over the new application files (to avoid any unexpected override), so the previous version's files are still used. The solution is to map only the plugins directory (which will be empty anyway) and the index.html ± background image.

If a simple redirect to another page is required then instead just create an index.php with the following code:

example ~/containers/yourls/html/index.php redirect
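The original redirect code is not shown here. One way to produce such a file is sketched below: a shell function that writes an index.php which issues a 302 redirect via PHP's header() function. The function name write_redirect_index and the example URL are hypothetical.

```shell
# Write an index.php that redirects visitors of the root URL elsewhere.
# usage: write_redirect_index <directory> <target URL>
write_redirect_index() {
  target_dir="$1"; url="$2"
  cat > "$target_dir/index.php" <<EOF
<?php
// Send visitors of the root URL to another page
header("Location: $url", true, 302);
exit;
EOF
}

# Usage:
#   write_redirect_index "$HOME/containers/yourls/html" "https://example.com/"
```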

You can change the favourites icon shown in the browser tab for the index - there are nice generators for these from text/emoji or from Font Awesome icons. Place the newly generated favicon files in ~/containers/yourls/favicon - the Docker compose file above maps the contents of this to the /var/www/html directory within the container.

You can also insert PHP pages into the /pages directory to create pages accessible via shortcode - see the YOURLS documentation for more information.

Homepage options

https://github.com/bastienwirtz/homer

Install via docker-compose (stack on Portainer):

docker-compose/homer.yml

https://github.com/linuxserver/Heimdall

Install via docker-compose (stack on Portainer):

docker-compose/heimdall.yml

https://github.com/Lissy93/dashy

DO NOT USE IF RAM <1GB

The build fails unless more RAM is available, and it leads to high CPU and swap usage.

See discussion at https://github.com/Lissy93/dashy/issues/136

Create the empty db file first

Install via docker-compose (stack on Portainer):

docker-compose/dashy.yml

Matomo

Self-hosted analytics platform - https://matomo.org/

Install via docker-compose (stack on Portainer):

docker-compose/matomo.yml

Then setup in NPM as usual with SSL and add the usual Authelia container advanced config.

Once this is done, access Matomo via the new proxy address and follow the click-through setup steps. Database parameters should already be pre-filled (from the environment variables above); the main step is just to set up a superadmin user. After this the setup process will generate the tracking code that has to be placed just before the closing </head> tag (or in the relevant WordPress configuration). This tracking code needs to be able to access the matomo.php and matomo.js files without authentication, so the following has to be added to the Authelia configuration:

add to access_control section of ~/containers/authelia/config/configuration.yml

PrivateBin

A minimalist, open source online pastebin where the server has zero knowledge of pasted data - https://privatebin.info/

Install via docker-compose (stack on Portainer):

docker-compose/privatebin.yml

Then create an NPM certificate/reverse proxy redirect.

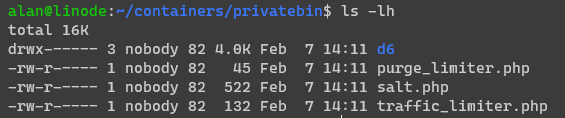

Fix directory permission issues

By default the new folder is owned by root, however even if created by the logged in user prior to creating the container the web application is still unable to write to the directory - we need to change this as follows:
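A sketch of the ownership change, using the user nobody and group 82 that the web application normally runs as according to the notes in this section. The default path and the function name fix_privatebin_perms are assumptions.

```shell
# Hand the data directory to the user/group the web application runs as,
# then restrict access to that owner only.
# Assumption: the data directory defaults to ~/containers/privatebin.
fix_privatebin_perms() {
  dir="${1:-$HOME/containers/privatebin}"
  sudo chown -R nobody:82 "$dir"
  sudo chmod 700 "$dir"
}

# Usage:
#   fix_privatebin_perms
```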

If you have issues fixing the directory permissions, the following steps show how to work out the correct owner.

From https://ppfeufer.de/privatebin-your-self-hosted-pastebin-instance/

- First make the privatebin directory globally writeable with sudo chmod 777 privatebin-data/
- Now open PrivateBin and create a paste. If you then do ls -lh this will show the user - normally user nobody and group 82 (see screenshot)
- Now change directory ownership with sudo chown -R nobody:82 privatebin/
- Now revert directory restrictions with sudo chmod 700 privatebin/

Administration commands

There is an administration capability built-in to PrivateBin.

This can be accessed by opening a terminal into the container and then cd /bin followed by:

- administration --help: display help info
- administration --statistics: show stats
- administration --purge: purge expired pastes
- administration --delete <pasteID>: delete specified paste

Docker Compose files for existing containers

It is possible to easily generate a Docker Compose file for a container that has been started via the command line - see https://github.com/Red5d/docker-autocompose